TikTok has unveiled a new set of filters and features to offer more ways to minimize undesired exposure in the app, amid multiple inquiries into how it protects younger users.

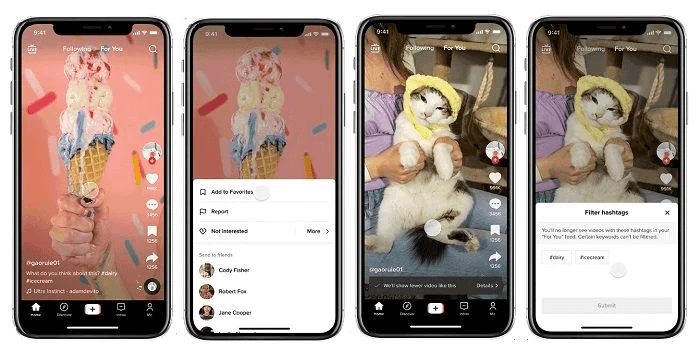

To begin with, TikTok has introduced a new feature that allows users to automatically filter out videos that contain terms or hashtags that they do not want to see in their feed.

Now, when you take action on a clip, you can block particular hashtags via the “Details” page, as you can see in this example. So you can now specify that you do not want to see any more videos with the hashtag #icecream, for whatever reason. You can also block content with certain key terms in the description.

This approach is limited, since the system only recognizes what uploaders manually enter in their descriptions rather than the actual content of the video. So if you had an ice cream phobia, there’s still a risk that the app could expose you to unsettling visuals, but it does offer a new way to manage your experience.
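To make that limitation concrete, here is a minimal sketch of a description-based filter. TikTok has not published how its filter works, so everything here is an assumption for illustration: the `Video` class, `is_filtered`, and `filter_feed` are hypothetical names, and the matching logic is simply one plausible way to check hashtags and key terms in uploader-written text.

```python
import re
from dataclasses import dataclass


@dataclass
class Video:
    """Hypothetical stand-in for a clip; not TikTok's data model."""
    video_id: str
    description: str  # text typed by the uploader, including hashtags


def is_filtered(video, blocked_hashtags, blocked_keywords):
    """Return True if the description contains a blocked hashtag or key term."""
    text = video.description.lower()
    hashtags = set(re.findall(r"#(\w+)", text))  # "#icecream" -> "icecream"
    words = set(re.findall(r"\w+", text))        # every word in the description
    blocked_tags = {h.lower().lstrip("#") for h in blocked_hashtags}
    blocked_words = {k.lower() for k in blocked_keywords}
    return bool(hashtags & blocked_tags) or bool(words & blocked_words)


def filter_feed(videos, blocked_hashtags=(), blocked_keywords=()):
    """Keep only the videos whose descriptions pass the text filter."""
    return [
        v for v in videos
        if not is_filtered(v, blocked_hashtags, blocked_keywords)
    ]


feed = [
    Video("a", "Best sundae in town #icecream"),
    Video("b", "Summer treats, no tags here"),  # could still show ice cream
    Video("c", "My dog learns a trick #pets"),
]
visible = filter_feed(feed, blocked_hashtags=["#icecream"])
print([v.video_id for v in visible])
```

In this sketch, video "b" passes the filter even if its footage shows ice cream, because nothing in its description mentions the blocked term. That is the gap a text-only filter leaves open.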

According to TikTok, all users will have access to the feature “over the next few weeks.”

Additionally, TikTok is expanding its limits on exposure to content linked to potentially harmful subjects, such as dieting, intense exercise, and grief, among others.

In December of last year, TikTok began a new round of tests to see whether it could lessen the negative effects of algorithmic amplification by reducing the number of videos in particular sensitive categories that are highlighted in users’ “For You” feeds.

The project is now moving on to the next phase, as TikTok explains:

“As a result of our tests, we’ve improved the viewing experience so that viewers now see fewer videos about these topics at a time. We’re still iterating on this work given the nuances involved. For example, some types of content may have both encouraging and sad themes, such as disordered eating recovery content.”

This is an intriguing area of research, since it essentially aims to stop people from falling down internet rabbit holes and becoming fixated on potentially harmful topics. Limiting how much content on a given topic users see at one time could have a positive effect on user behavior.

Finally, TikTok is also developing a new content rating system, which would classify TikTok clips in a way similar to movie ratings.

“In the coming weeks, we’ll begin to introduce an early version to help prevent content with overtly mature themes from reaching audiences between ages 13-17. When we detect that a video contains mature or complex themes – for example, fictional scenes that may be too frightening or intense for younger audiences – a maturity score will be allocated to the video to help prevent those under 18 from viewing it across the TikTok experience.”

The same detection mechanism that TikTok has used to help advertisers avoid pairing their promos with distressing content may be used here to better guard against mature themes and material.

However, it would be interesting to learn how TikTok’s technology specifically detects this type of content.

What kind of entity identification does TikTok have in place, and what can its AI systems actually flag in videos, based on which criteria?

TikTok's system may be quite sophisticated in this regard, which would help explain why its algorithm is so successful at keeping people watching: it can identify the key elements of content that you’re more likely to engage with, based on your past activity.

It appears that TikTok’s technology is getting quite good at identifying more elements in uploaded videos. The more entities TikTok can detect, the more signals it has to match you with clips.

As noted, the changes come as TikTok continues to come under fire in Europe for failing to restrict the exposure of underage users to explicit content. After an EU probe found TikTok to be “failing in its obligation” to safeguard young users from covert advertising and harmful content, the company promised last month to revise its branded content policies. On a different front, reports have also indicated that many children have suffered serious injuries, and some have even died, while taking part in risky challenges inspired by the app.

TikTok has also introduced measures to combat this, and it will be interesting to see whether these new tools help reassure regulators that it is doing everything possible to keep its young audience safe.